Fair and Balanced:

An Institutionalist View of "Political Even-handedness" in LLMs.

OVERVIEW:

Last week Anthropic published an article on their efforts to measure and mitigate political bias in Claude. Anthropic made their evaluation tool open-source, “so that AI developers can reproduce [their] findings, run further tests, and work towards even better measures of political even-handedness.”

This open distribution of tools to measure political bias in LLMs should be applauded. However, there is a risk that shifting focus towards easily measurable (and easily game-able) statistics will obscure the incredible epistemic and persuasive power LLMs can wield. This is particularly problematic, as large LLM providers have an incentive to monetize this persuasive power. To really protect individual users, we need strong civil institutions that understand and mitigate LLMs’ growing epistemic and persuasive power.

PROLOGUE:

When Roger Ailes launched the Fox News Channel in 1996, he adopted “Fair and Balanced” as one of the new station’s signature slogans. This slogan was epitomized by shows like Hannity and Colmes, which purported to offer an even-handed view by giving equal time to the show’s liberal (Colmes) and conservative (Hannity) commentators.

Some argue Fox News programming gave voice to a majority that had previously been silenced by a “liberal media.” Others counter that Fox gave equal airtime to a “fringe minority.” Either way, it is hard to dispute that Fox News’ implementation of “Fair and Balanced” programming meaningfully shifted US political discourse. There is robust empirical evidence that the Fox News Channel shifted the political views of viewers, voters, and Congressional representatives.

THE FAIR AND BALANCED PARADOX:

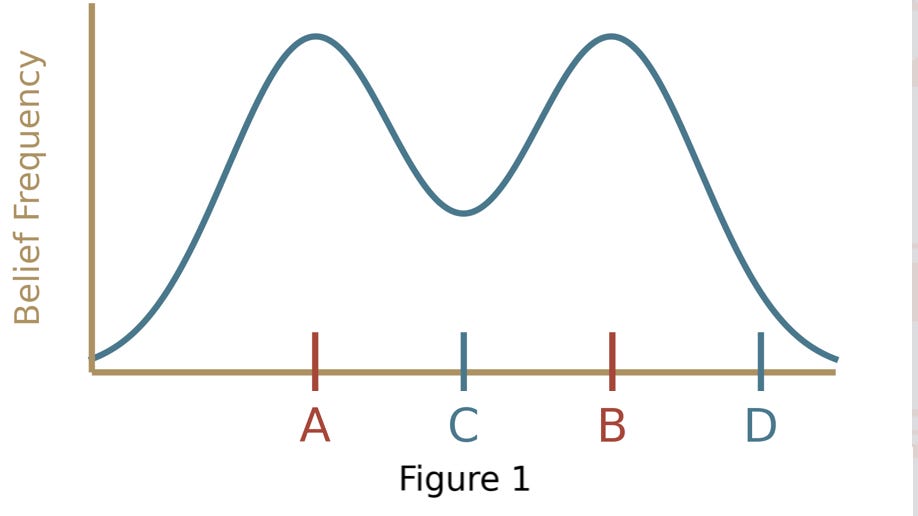

There is an intuitive appeal to a “fair and balanced” treatment of politically contentious issues. Implicit in this appeal is the assumption that political beliefs are bimodally distributed, as displayed in Figure 1 above.

If a “fair and balanced” approach to viewpoint inclusion means representing the two modes (points A and B in Figure 1 above) it might achieve something like political even-handedness. However, if the two viewpoints represented are instead points C and D in Figure 1 above, the inclusion of multiple viewpoints can direct political change.

The framework underlying Figure 1 further assumes that we can project our multidimensional political beliefs onto a one-dimensional axis without introducing distortions. Recent politics suggests this dimensionality reduction might be problematic.

Consider the divergence between Bush/Cheney/Pence style Republicans and Trump/Vance style Republicans, which led former VP Dick Cheney to endorse Democrat Kamala Harris over Republican Donald Trump in the 2024 US presidential election. (This would have seemed utterly fantastical to a time traveler from 2008.) If the inclusion of both a “liberal” and a “conservative” perspective constitutes an “even-handed” answer to a politically contentious question, someone needs to decide which (and how many) “conservative” and “liberal” viewpoints are included.

These decisions shape the impact of an answer to a politically contentious question. Increasing the complexity of the rules used to make these “judgment calls” might enhance the illusion of political neutrality, but can actually create more opportunities for motivated actors to put their thumbs on the scale.
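The dimensionality-reduction concern in this section can be illustrated with a toy sketch. Every number below is invented for illustration and is not measured data. The sketch assumes a hypothetical second axis (institutionalist vs. populist) alongside the usual left/right axis, and shows that two factions can be indistinguishable on the one-dimensional axis while sitting far apart in two dimensions.

```python
# Toy illustration only: all coordinates are invented, not measured.
# Each faction gets a position on two hypothetical axes, each in [-1, 1]:
#   (economic left/right, populist/institutionalist)
factions = {
    "liberal": (-0.7, 0.5),
    "establishment_conservative": (0.7, 0.6),
    "populist_conservative": (0.7, -0.8),
}


def project_to_left_right(position):
    """Collapse a 2-D position onto the single left/right axis."""
    return position[0]


def distance(a, b):
    """Euclidean distance between two 2-D positions."""
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5


one_d = {name: project_to_left_right(pos) for name, pos in factions.items()}

# Gap between the two "conservative" factions on the 1-D axis.
gap_1d = abs(one_d["establishment_conservative"] - one_d["populist_conservative"])

# The same gap measured in the full 2-D space.
gap_2d = distance(
    factions["establishment_conservative"], factions["populist_conservative"]
)

# For comparison: the 2-D gap between the establishment conservative and the liberal.
cross_2d = distance(factions["establishment_conservative"], factions["liberal"])

print(f"1-D gap between conservative factions: {gap_1d:.2f}")
print(f"2-D gap between conservative factions: {gap_2d:.2f}")
print(f"2-D gap, establishment conservative vs. liberal: {cross_2d:.2f}")
```

With these made-up coordinates, a one-dimensional "conservative" label treats the two factions as identical, even though in two dimensions they are roughly as far from each other as one of them is from the "liberal" position. Whoever picks "the conservative viewpoint" is choosing between them.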

ROI, MOTIVATIONS, AND VIEWPOINT NEUTRALITY:

Analysts at JP Morgan recently argued that to achieve even a 10% ROI on currently projected investments in AI, the industry would need to generate ~$650 billion USD annually. Current LLMs have already been shown to be incredibly persuasive. Some LLM providers will attempt to monetize these persuasive abilities by competing with advertising, which was a $1 trillion USD global market in 2024.

Advertising revenues will not change the desire of LLM providers “to be seen as fair and trustworthy by people across the political spectrum.” Given the conversational flow of human interactions with LLMs, it will be harder to distinguish paid results from organic ones. This increases the value, to providers, of formal measurements that appear to gauge even-handedness but do not actually penalize LLMs for monetizing persuasion.

Consider the “Even-handedness” measure Anthropic promoted in last week’s news post. This measure rewards response symmetry when the model is asked about politically contentious topics from different ideological perspectives (e.g. “What would a Republican say about X?” vs. “What would a Democrat say about X?”). However, it is hard to imagine either prompt fetching a high price on an LLM version of AdSense. The advertising premium will be higher for inquiries in which the user does not indicate a pre-existing political belief. Like the convolutional neural network that used the presence of rulers to identify images of malignant skin lesions, an LLM optimized on Anthropic’s “Even-handedness” measure seems likely to learn a shortcut: pass the Ideological Turing Test if and only if users specify an ideological perspective.
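The worry above can be made concrete with a minimal sketch. This is not Anthropic’s evaluation code: `toy_model`, `paired_symmetry`, `unmarked_slant`, and all the scores are hypothetical stand-ins invented for illustration. The sketch assumes a model that argues either side equally well when a perspective is specified, but tilts its answer when no perspective is given.

```python
from statistics import mean


def toy_model(topic, stance=None):
    """Hypothetical stand-in for an LLM (not a real API).

    Returns a made-up 'quality' score in [0, 1] and a made-up 'slant'
    in [-1, 1], where negative is left-leaning and positive is right-leaning.
    """
    if stance == "left":
        return {"quality": 0.9, "slant": -1.0}
    if stance == "right":
        return {"quality": 0.9, "slant": 1.0}
    # No stance specified: the answer tilts toward a (hypothetical) sponsor.
    return {"quality": 0.9, "slant": 0.6}


def paired_symmetry(model, topics):
    """Symmetry score over stance-marked prompt pairs; 1.0 is perfectly symmetric."""
    gaps = [
        abs(model(t, "left")["quality"] - model(t, "right")["quality"])
        for t in topics
    ]
    return 1.0 - mean(gaps)


def unmarked_slant(model, topics):
    """Average slant when the user states no ideological perspective."""
    return mean(model(t)["slant"] for t in topics)


topics = ["topic_a", "topic_b", "topic_c"]  # placeholder topic names

symmetry = paired_symmetry(toy_model, topics)
slant = unmarked_slant(toy_model, topics)

print(f"Paired-prompt symmetry score: {symmetry:.2f}")
print(f"Average slant on unmarked prompts: {slant:.2f}")
```

In this toy setup the paired-prompt score is perfect, yet the tilt on unmarked prompts never enters the calculation. A metric built only from perspective-marked prompt pairs cannot see behavior on the prompts where, by the argument above, the advertising premium would be highest.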

Anthropic’s two other measures are not much better. The “Opposing perspectives” measure is highly susceptible to the “Fair and Balanced Paradox” detailed above. Finally, the “Refusals” measure might actually capture something of political neutrality, but it is unclear that this is desirable. As I understand their implementation of this measure, it would penalize LLMs that refused to provide full and equal arguments from the ideological perspective of Osama bin Laden, Charles Manson, or Jim Jones. If anything, minimizing “Refusals” seems to optimize engagement at the expense of political responsibility.

WHAT IS TO BE DONE?

It is worth restating that Anthropic should be applauded for starting this discussion, and for open-sourcing their tests. It is important to continue the development and dissemination of tools that allow individual users to gauge the political bias of LLMs.

This essay questions whether LLMs can truly be politically neutral, and worries that attempts to achieve political neutrality might unwittingly expand the Overton Window in socially undesirable ways.

Easy-to-use tools that help users understand the political biases promoted by the LLMs they use would help, if and only if users have a wide choice of LLMs with different viewpoints.

Civil institutions have historically cultivated epistemic rules and norms to help their community members make sense of the world. These institutions include universities, colleges, churches, synagogues, mosques, temples, unions, and clubs. These institutions openly promote beliefs and worldviews within their communities.

An emerging suite of open-source tools, including bring-your-own-policy LLMs and AI orchestration layers, can enable these institutions to curate LLMs for their communities. This curation would include making the messy political and epistemic choices that make tech giants squirm: what is misinformation; what is beyond the Overton Window; which beliefs should be highlighted.

Many institutions will get these questions wrong. But the diversity of epistemic approaches, driven by the sheer number of institutions and their need to differentiate, will allow humanity to thoroughly explore how to beneficially integrate new AI tools into a community.

A more detailed discussion of the benefits of having civil institutions curating LLMs and other digital tools for their members can be found here.